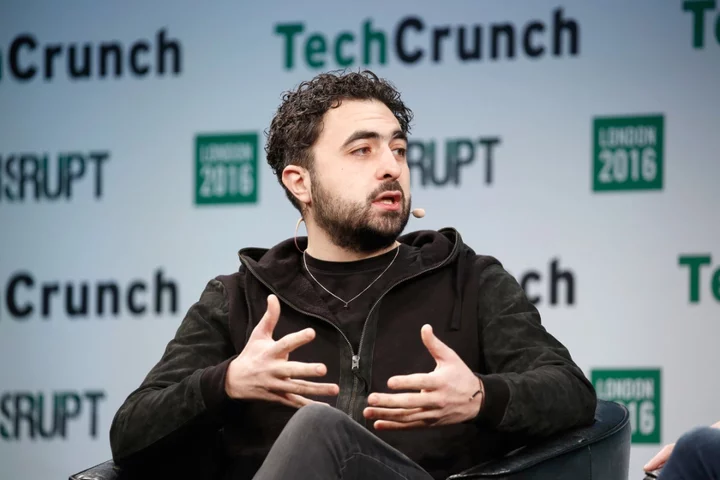

Synthetic viruses could be generated through the misuse of artificial intelligence and potentially spark pandemics, a former Google executive and AI expert has warned.

Google DeepMind co-founder Mustafa Suleyman expressed concern that using AI to engineer more harmful pathogens could lead to a pandemic-like scenario.

“The darkest scenario is that people will experiment with pathogens, engineered synthetic pathogens that might end up accidentally or intentionally being more transmissible or more lethal,” he said in a recent podcast episode.

Just as restrictions prevent people from easily accessing pathogenic microbes such as anthrax, Mr Suleyman has called for measures to restrict access to advanced AI technology and the software that runs such models.

“That’s where we need containment. We have to limit access to the tools and the know-how to carry out that kind of experimentation,” he said in The Diary of a CEO podcast.

“We can’t let just anyone have access to them. We need to limit who can use the AI software, the cloud systems, and even some of the biological material,” the Google DeepMind co-founder said.

“And of course on the biology side it means restricting access to some of the substances,” he said, adding that AI development needs to be approached with a “precautionary principle”.

Mr Suleyman’s statements echo concerns raised in a recent study that even undergraduates with no relevant background in biology can obtain detailed suggestions for bioweapons from AI systems.

Researchers, including those from the Massachusetts Institute of Technology, found that chatbots could suggest “four potential pandemic pathogens” within an hour and explain how they could be generated from synthetic DNA.

The research found chatbots also “supplied the names of DNA synthesis companies unlikely to screen orders, identified detailed protocols and how to troubleshoot them, and recommended that anyone lacking the skills to perform reverse genetics engage a core facility or contract research organization”.

Large language models (LLMs) such as ChatGPT “will make pandemic-class agents widely accessible as soon as they are credibly identified, even to people with little or no laboratory training,” the study said.

The study, whose authors included MIT biorisk expert Kevin Esvelt, called for “non-proliferation measures”.

Such measures could include “pre-release evaluations of LLMs by third parties, curating training datasets to remove harmful concepts, and verifiably screening all DNA generated by synthesis providers or used by contract research organizations and robotic ‘cloud laboratories’ to engineer organisms or viruses”.
