TAIPEI—In 2022, Intel tipped its concept of a VPU, a new silicon component for its coming “Meteor Lake” processors. At an invite-only event in Taipei, the CPU giant demonstrated the first operating Meteor Lake processor, sharing a few more details about these next-gen chips, and talking up their abilities in the hottest area going today: AI. The VPU will be central to that.

John Rayfield, Intel’s vice president and general manager of client AI, met with a select group of journalists the weekend before Computex to run a few demos with the first VPU-equipped Meteor Lake chip to break cover. The exact Meteor Lake chip model, and its detailed speeds and feeds, were not shared. What was made clear, however, is that Meteor Lake will originate in the mobile market. That's because the new VPU module is all about power efficiency and putting demanding AI-related tasks onto this new processing component.

What Is Meteor Lake?

Intel's next generation of processors has been dubbed with this latest “Lake” code name, and Intel laid out a few of its principles around it in the demo. The preceding generations, Alder Lake (12th Gen Core) and Raptor Lake (13th Gen Core), emphasized performance through their hybrid design, with new Performance cores (P-cores) and Efficient cores (E-cores) on the die. These generations saw increases in IPC, frequency, and performance per watt.

In the course of development, Intel did lots of work with Microsoft on making sure its processors would shift tasks to the proper cores, which manifested itself within Windows 11 and in Intel's Thread Director tool. Taking things further, Meteor Lake will emphasize, from the outset, power efficiency via a combination of new process technology and the addition of the VPU in Meteor Lake’s new, modular chip design, employing what are loosely referred to as “chiplets” or "tiles."

Getting software to play nice with a new processor component is the trick, of course, but the work with Alder and Raptor Lake means that there is a big app ecosystem that can be brought along; the ISV support is in place, and, according to Rayfield, Intel's strength is in the vast base of x86 applications.

Part of what is making the migration to the VPU possible is the fruit of Intel's Foveros tech and the 2016 acquisition of chip maker Movidius. Foveros, in the simplest terms, employs a 3D stacking technology that allows chip modules, or chiplets, to be layered, rather than laid out side by side. In the case of Meteor Lake, one of these modules will be the VPU, which the chip giant says will be the secret sauce in this generation.

The Meteor Lake Basics: Early Days

Intel did not reveal anything yet about individual Meteor Lake chips. As noted last year in a presentation at the Hot Chips conference, Meteor Lake will be produced on the Intel 4 process (in contrast to the Intel 7 used by Alder and Raptor Lake). The following "Arrow Lake" generation will be on Intel's 20A process. A reminder: The number after “Intel” in its process names no longer corresponds to the nanometer size in the process technology.

In ramping up Meteor Lake on Intel 4, the company is emphasizing next-generation power management and hyper-efficiency. Meteor Lake will also feature Intel's latest revision of its on-chip graphics, which will be based on Intel Arc, and in this case, Arc’s “Alchemist” architecture. The Arc integration will bring with it support for key technologies like DirectX 12 Ultimate, XeSS (supersampling), and ray tracing in a low-power format. Intel representatives would not share greater detail around the on-chip Arc, but did insist the graphics acceleration will be “a lot better” than existing integrated graphics (IGP) solutions from Intel. The Arc IGP will not be able to compare to Intel’s Arc desktop cards in terms of raw performance, due to the very different power envelope, but it will be the same design being dropped on as a chiplet, operating at lower thermals.

The VPU, though, is poised to be the biggest development here by far, tasked with local inferencing on the PC. Rayfield went as far as to emphasize that this is a watershed moment in the PC space, with the integration of efficient local client AI processing and what that will make possible. “We're turning this corner in PCs where the interface is going to radically change,” he said. “The UI will change more in the next five years than it has in the last 20...in a good way.”

VPU Cares? Why Intel's New Tiles Matter

Intel sees itself positioned ideally for leveraging AI and setting the table for useful end-user experiences as the technology evolves. Rayfield pointed out, for one, that latency is a problem with AI when AI is integrated into a user interface. Based on past computing experiences, the user expects instant responses, which cloud-based solutions simply can’t match. There are also fundamental challenges around the cost of scaling AI applications like ChatGPT to many millions of PC users. It’s simply cost-prohibitive, versus putting some of that processing load on the client side. Doing some of the work locally allows for massive distributed scaling, better control over privacy (your data stays local), and low latency (the compute is happening on your device).

How does that actually play out in current computing terms? AI today is used in client platforms for things like real-time background replacement and blurring in video calls, and on-the-fly noise reduction. These tasks use inference models that work on CPU and GPU; as effects get bigger and user expectations get higher, the compute requirements become more demanding, and power usage can become an issue, too. That said, the more prominent and identifiable forms of AI taking the world by storm, like ChatGPT and Stable Diffusion, are cloud-based. Rayfield cited some stats suggesting a 50-fold increase in compute-power demands from 2021 to 2023 for dynamic noise suppression, and even more enormous gains in demands for generative AI work based on large language models (LLMs), such as Microsoft's Copilot. These skyrocketing demands mean lots of potential for offloading some of that need onto local inferencing at low power levels.

The VPU that is arriving as a tile on Meteor Lake is a neural accelerator. The CPU and GPU will still get their own tasks, and this will remain a heterogeneous platform, but there are major advantages to putting the load on the VPU for heavy AI tasks. That said, some smaller AI-related tasks will remain handled by the CPU portion, on a case-by-case basis, if it's not worth pushing them through the device driver.
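To make that case-by-case trade-off concrete, here is a minimal sketch of an offload heuristic. Everything in it is a hypothetical illustration rather than Intel's actual scheduling logic: the `Engine` type, the `choose_engine` function, and the throughput and driver-overhead figures are assumed values picked only to show why a tiny task can be cheaper to leave on the CPU.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Engine:
    """Hypothetical compute engine; all numbers are illustrative assumptions."""
    name: str
    ops_per_ms: float            # assumed sustained throughput
    dispatch_overhead_ms: float  # assumed fixed cost of going through a driver


def estimated_ms(engine: Engine, workload_ops: float) -> float:
    """Fixed dispatch cost plus compute time for the workload."""
    return engine.dispatch_overhead_ms + workload_ops / engine.ops_per_ms


def choose_engine(workload_ops: float, engines: list[Engine]) -> Engine:
    """Pick the engine with the lowest estimated total time."""
    return min(engines, key=lambda e: estimated_ms(e, workload_ops))


# Assumed figures: the VPU is faster but pays a fixed driver round trip.
CPU = Engine("CPU", ops_per_ms=1_000.0, dispatch_overhead_ms=0.0)
VPU = Engine("VPU", ops_per_ms=10_000.0, dispatch_overhead_ms=2.0)

small = choose_engine(500.0, [CPU, VPU])        # tiny task
large = choose_engine(1_000_000.0, [CPU, VPU])  # heavy inference task
print(small.name, large.name)
```

With these assumed numbers, the small task stays on the CPU because the driver overhead outweighs the speedup, while the large task goes to the VPU. A real policy would also weigh power draw, which is the VPU's main selling point here; this sketch models time only.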

While Intel’s foundational work with Microsoft over the last few years will be key, the chip maker is also seeding efforts in a variety of channels. Take, for example, ONNX, an open-source format for AI models that Intel is contributing to; the company has also made efforts to expose the low-power acceleration of VPUs to Web-based apps. In addition, it has contributed to efforts with key utilities like Open Broadcaster Software (OBS), Audacity, and Blender to create plug-ins that will let those seminal pieces of software take advantage of the VPU.

In addition, the company has its own OpenVINO tool stack for AI, centered around the mapping of workloads to different tiles and optimizing the workloads for the different engines. Intel also works with a host of ISVs who will be able to use the VPU to help with general AI experiences. Today, examples of these include applying background blur without corrupting the foreground objects, which is actually quite computationally intensive to do well.
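As a rough illustration of what mapping a workload to an engine can look like in application code, the sketch below picks the first preferred device from a list of available ones. The `pick_device` helper and the preference order are hypothetical. The device labels are modeled on OpenVINO-style names, where a list like this can be read from `openvino.Core().available_devices`, but they are hard-coded here so the example runs without OpenVINO installed.

```python
def pick_device(available: list[str], preferred: list[str]) -> str:
    """Return the first preferred device present in `available`.

    Device names may carry an index suffix such as "GPU.0",
    so a preference of "GPU" also matches "GPU.0".
    """
    for want in preferred:
        for have in available:
            if have == want or have.startswith(want + "."):
                return have
    raise RuntimeError(f"none of {preferred} found in {available}")


# Hard-coded stand-ins for what a runtime might report (assumed values).
meteor_lake_like = ["CPU", "GPU.0", "NPU"]
older_laptop = ["CPU", "GPU.0"]

# Hypothetical policy for a sustained, low-power inference task.
low_power_order = ["NPU", "GPU", "CPU"]

print(pick_device(meteor_lake_like, low_power_order))
print(pick_device(older_laptop, low_power_order))
```

The same application code can then fall back gracefully on machines without the new tile, which is one reason a software layer of this kind matters as much as the silicon.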

This, combined with the open-source efforts, is a necessary piece of the puzzle. “More than 75% of it is a software problem,” Rayfield said. “We're primarily a hardware company, but the problem is primarily in the software.”

Lakes Alive: The Meteor Lake Demo

So, as for the demo shown on site at Computex: It was very early hardware, and very early days. Intel worked with one of its system OEMs to produce a demo mobile (laptop) platform. The system maker’s name was tastefully concealed by Intel stickers. And in true demo format, it took some effort to work with. For example, the keyboard on the early-hardware laptop was not operational.

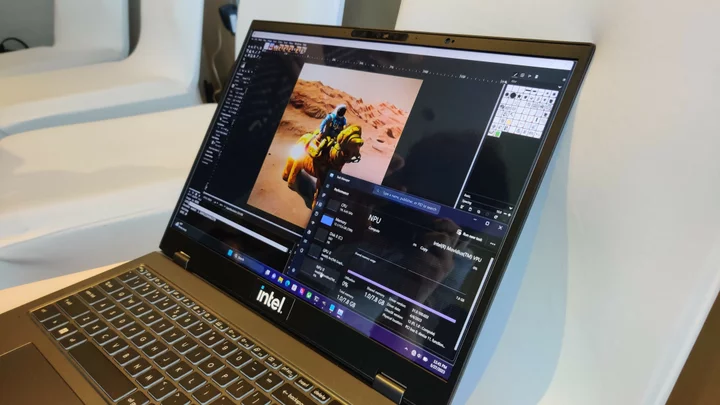

However, Rayfield and the rest of the Intel group demonstrated the use of the Stable Diffusion AI image generator, locally run, with a combination of the CPU, GPU, and VPU, inside the open-source image editor GIMP. It was run using an OpenVINO plug-in.

The image-generation tasks were run on the system proper, with the laptop not connected to the internet at all. The Stable Diffusion seeds were kept the same from run to run. Here you can see the Stable Diffusion results of the demo prompt, which was "an astronaut riding a horse on the moon."

With Windows 11’s Task Manager live, it was clear that the VPU neural engine (“NPU” in the image here) was being hit during the course of the run. (The image below is just for illustration of the VPU/NPU within Task Manager; the load graph is flatlined since the demo was over when this was shot.) As Rayfield explained, in Meteor Lake, the processor package as a whole will comprise four tiles, each being an SOC, and one of them the VPU.

Ultimately, we will likely see Meteor Lake in laptops first, specifically in the mobile thin-and-light segment. It's in these kinds of PCs that a VPU can assist most with power efficiency, as AI functions are integrated into coming software and plug-ins. Eventually, however, the VPU will scale to all Meteor Lake segments, and all SKUs in the Meteor Lake line will integrate a VPU tile.

Intel says it intends to ship Meteor Lake by the end of 2023, and more details will roll out over the summer in the run-up to release. Stay tuned!