Google Bard can now solve logic-based math and word problems with 30% greater accuracy, the company announced this week. It's an impressive accomplishment, but the news highlights Google's ability to measure its model's weaknesses, and its choice to keep that data from the model's "trainers"—the public.

How accurate were Bard's math answers when the AI chatbot debuted? How much better could they be? In what other subjects might it be ill-advised to take what Bard says as fact?

"Our new method allows Bard to generate and execute code to boost its reasoning and math abilities," Jack Krawczyk, product lead for Bard, and Amarnag Subramanya, VP of engineering, wrote in a Wednesday blog post. "So far, we've seen this method improve the accuracy of Bard’s responses to computation-based word and math problems in our internal challenge datasets by approximately 30%."
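Google hasn't said how this works under the hood. As a rough illustration of why handing math to code can help, here is a hypothetical Python snippet of the kind a chatbot might write and run, instead of inferring an answer from text patterns. The problem, the `prime_factors` function name, and the approach (plain trial division) are our own assumptions, not actual Bard output.

```python
def prime_factors(n: int) -> list[int]:
    """Return the prime factors of n (n >= 2) in ascending order, with repeats."""
    factors = []
    divisor = 2
    # Only divisors up to sqrt(n) need checking; anything left over is prime.
    while divisor * divisor <= n:
        while n % divisor == 0:
            factors.append(divisor)
            n //= divisor
        divisor += 1
    if n > 1:
        factors.append(n)  # the remaining value is itself prime
    return factors


# A hypothetical question a model might hand off to code:
# "Which primes multiply together to make 1001?"
print(prime_factors(1001))  # [7, 11, 13]
```

The appeal is that the program's answer is computed, not predicted: if the model writes the code correctly, the arithmetic comes out exact every time.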

The first part of that announcement—that PaLM 2, the model Bard runs on, can now write and run its own code—may conjure visions of a future computer takeover, but let's put that aside for a second. It's the second half of the announcement, where Google admits its model was previously sub-par at math, that got my attention.

I've spent time trying to evaluate the answers produced by ChatGPT, Bard, and Bing. When we review a cell phone or computer at PCMag, we ask questions like: "What's the quality of the camera on the latest iPhone? How much storage does HP's laptop offer?" But the chatbot industry is so nascent it has not rallied around standardized specs. How do you evaluate these black box systems when we know very little about them?

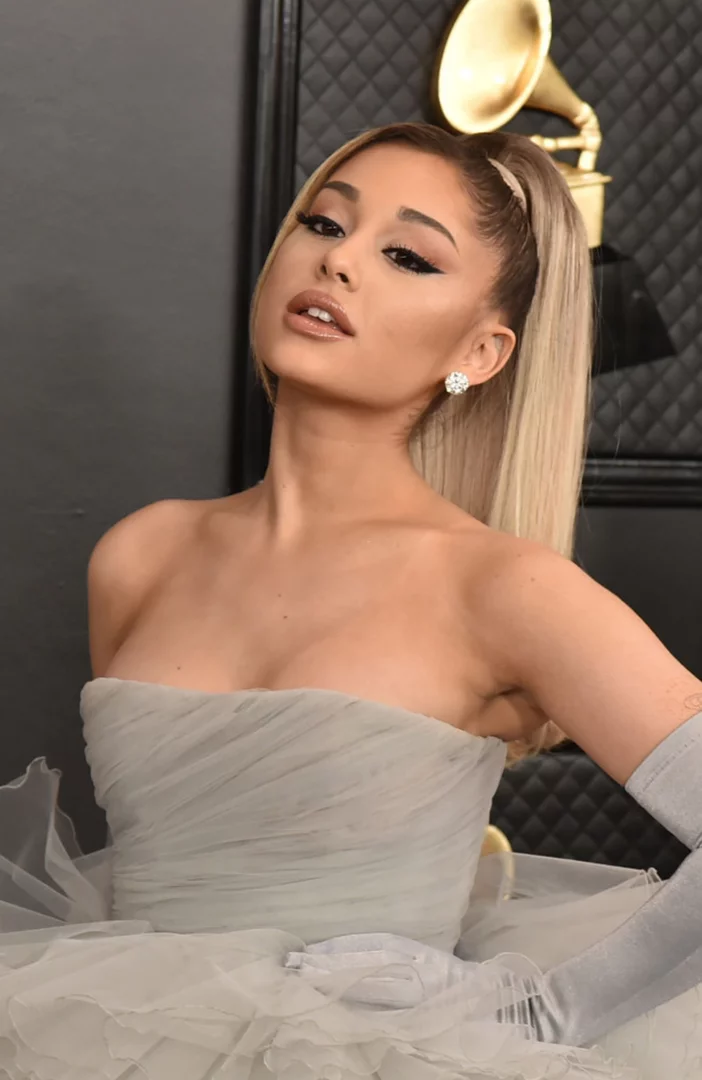

Bing's AI search includes a list of citations and sentences attributed to each.

Accuracy is arguably the most important specification, and it's something all three of the major AI chatbots have struggled with. It would be helpful if they attached an accuracy or confidence score to responses, such as "76% Accuracy Estimate." ChatGPT and Bard may want to take a page from Bing on citing sources, too.

In the absence of information from the AI developers themselves, independent efforts have cropped up to measure accuracy. One tool, created by a group of students and faculty at UC Berkeley in April, collects thousands of opinions on the quality of chatbot responses. It then assigns each model a rating using the Elo system, which the group says is "a widely-used rating system in chess and other competitive games."

Anyone can participate by joining the site's Chatbot Arena, where "a user can chat with two anonymous models side-by-side and vote for which one is better," the site says. By this method, ChatGPT's model ranks above Bard's on the group's leaderboard. It's an interesting effort that can gather a wide range of opinions, but it's still surface-level.
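For the curious, here is a minimal Python sketch of how the textbook Elo update turns head-to-head votes into a ranking. It assumes a starting rating of 1000 and a K-factor of 32, and the function names and model labels are hypothetical; the Berkeley group's own code may use different parameters or a different fitting method altogether.

```python
from collections import defaultdict

# Illustrative parameters (assumptions, not the Berkeley group's settings)
K = 32                   # how far a single vote can move a rating
INITIAL_RATING = 1000.0  # every model starts here


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that model A beats model B under the standard Elo curve."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def record_vote(ratings: dict, model_a: str, model_b: str, winner: str) -> None:
    """Update ratings in place for one side-by-side vote ("a", "b", or "tie")."""
    ra, rb = ratings[model_a], ratings[model_b]
    ea = expected_score(ra, rb)
    score_a = {"a": 1.0, "b": 0.0, "tie": 0.5}[winner]
    # A gains exactly what B loses, so the total rating pool stays constant.
    ratings[model_a] = ra + K * (score_a - ea)
    ratings[model_b] = rb - K * (score_a - ea)


def rate(votes) -> dict:
    """Replay (model_a, model_b, winner) votes in order and return final ratings."""
    ratings = defaultdict(lambda: INITIAL_RATING)
    for model_a, model_b, winner in votes:
        record_vote(ratings, model_a, model_b, winner)
    return dict(ratings)


# Hypothetical votes from anonymous side-by-side comparisons
votes = [
    ("model-x", "model-y", "a"),
    ("model-x", "model-y", "a"),
    ("model-y", "model-x", "tie"),
]
leaderboard = sorted(rate(votes).items(), key=lambda kv: kv[1], reverse=True)
for name, rating in leaderboard:
    print(f"{name}: {rating:.1f}")
```

An upset against a higher-rated model moves the numbers more than an expected win does, and because updates are applied one vote at a time, the order in which votes arrive can nudge the final ratings. Note what the score captures: which answer voters preferred, not whether it was factually correct.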

Squash Misinformation or Protect Trade Secrets?

Every company keeps certain metrics close to the vest, but the threat of misinformation makes this more than a simple case of protecting trade secrets.

As Kent Walker, president of global affairs and chief legal officer for Google and Alphabet, noted last month, "if not developed and deployed responsibly, AI systems could amplify current societal issues, such as misinformation. And without trust and confidence in AI systems, businesses and consumers will be hesitant to adopt AI, limiting their opportunity to capture AI’s benefits."

These risks are likely one reason Google has referred to Bard as an experiment since it launched in February, a badge it still wears today. But it feels less experimental by the day. Last month, Google announced it will eventually update the main Google.com search page to feature an AI-generated paragraph, a beta version of which is available for testing now.

Current Google Bard interface

Google, like OpenAI, is incentivized to get as many people using Bard as possible. The more users, the better it gets, through a technique called reinforcement learning from human feedback (RLHF). But without reliable information on the model's performance, how useful is that feedback? Early adopters may have a gut feeling that an answer is wrong, but they have nothing to go on besides a small disclaimer at the bottom of the page: "Bard may display inaccurate or offensive information that doesn’t represent Google’s views."

Publishing the accuracy of an AI-generated answer may seem like a radical shift from where we are today, but it reflects the information Google already privately measures; the public just isn't looped in. As Bard usage increases, it's time to get real about this so-called experiment.