One of the “godfathers” of artificial intelligence (AI) has said he feels “lost” as experts warned the technology could lead to the extinction of humanity.

Professor Yoshua Bengio told the BBC that all companies building AI products should be registered and people working on the technology should have ethical training.

It comes after dozens of experts put their names to a letter organised by the Centre for AI Safety, which warned that the technology could wipe out humanity and the risks should be treated with the same urgency as pandemics or nuclear war.

Prof Bengio said: “It is challenging, emotionally speaking, for people who are inside (the AI sector).

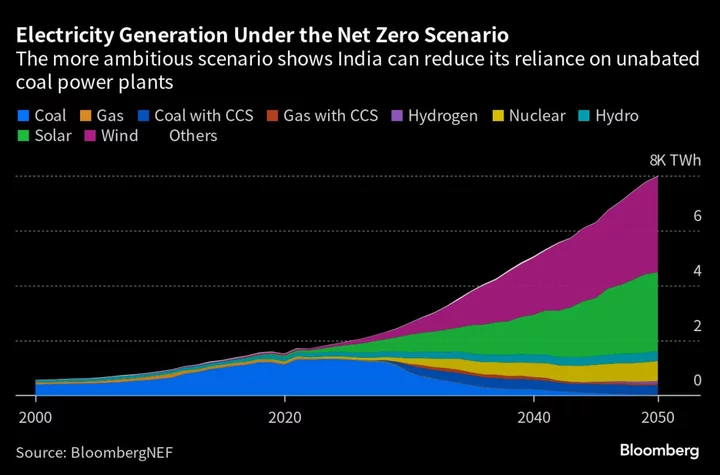

“You could say I feel lost. But you have to keep going and you have to engage, discuss, encourage others to think with you.”

Senior bosses at companies such as Google DeepMind and Anthropic signed the letter along with another pioneer of AI, Geoffrey Hinton, who resigned from his job at Google earlier this month, saying that in the wrong hands, AI could be used to harm people and spell the end of humanity.

Experts had already been warning that the technology could take jobs from humans, but the new statement warns of a deeper concern, saying AI could be used to develop new chemical weapons and enhance aerial combat.

AI apps such as Midjourney and ChatGPT have gone viral on social media sites, with users posting fake images of celebrities and politicians, and students using ChatGPT and other “large language models” to generate university-grade essays.

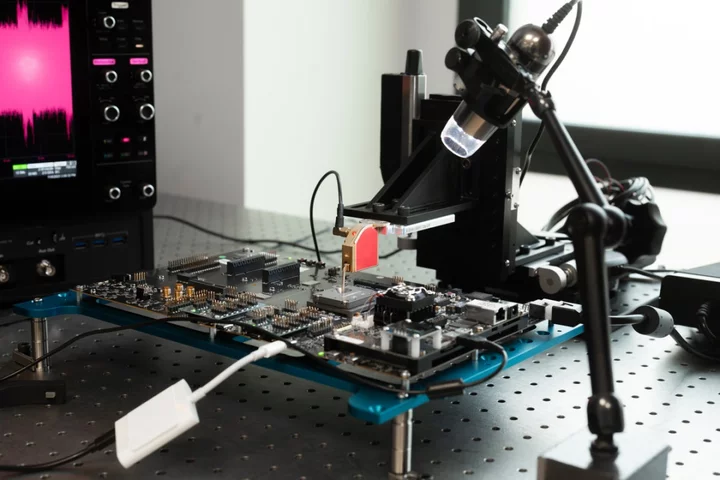

But AI can also perform life-saving tasks, such as algorithms analysing medical images like X-rays, scans and ultrasounds, helping doctors to identify and diagnose diseases such as cancer and heart conditions more accurately and quickly.

Last week Prime Minister Rishi Sunak spoke about the importance of ensuring the right “guard rails” are in place to protect against potential dangers, ranging from disinformation and national security to “existential threats”, while also driving innovation.

He retweeted the Centre for AI Safety’s statement on Wednesday, adding: “The government is looking very carefully at this. Last week I stressed to AI companies the importance of putting guardrails in place so development is safe and secure. But we need to work together. That’s why I raised it at the @G7 and will do so again when I visit the US.”

Prof Bengio told the BBC all companies building powerful AI products should be registered.

“Governments need to track what they’re doing, they need to be able to audit them, and that’s just the minimum thing we do for any other sector like building aeroplanes or cars or pharmaceuticals,” he said.

“We also need the people who are close to these systems to have a kind of certification… we need ethical training here. Computer scientists don’t usually get that, by the way.”

Prof Bengio said of AI’s current state: “It’s never too late to improve.

“It’s exactly like climate change. We’ve put a lot of carbon in the atmosphere. And it would be better if we hadn’t, but let’s see what we can do now.”

Oxford University expert Sir Nigel Shadbolt, chairman of the London-based Open Data Institute, told the BBC: “We have a huge amount of AI around us right now, which has become almost ubiquitous and unremarked. There’s software on our phones that recognise our voices, the ability to recognise faces.

“Actually, if we think about it, we recognise there are ethical dilemmas in just the use of those technologies. I think what’s different now though, with the so-called generative AI, things like ChatGPT, is that this is a system which can be specialised from the general to many, many particular tasks and the engineering is in some sense ahead of the science.

“We don’t quite know how to understand the absolute consequences of this technology. We all have in common a recognition that we need to innovate responsibly, that we need to think about the ethical dimension, the values that these systems embody.

“We have to understand that AI is a huge force for good. We have to appreciate, not the very worst, (but) there are lots of existential challenges we face… our technologies are on a par with other things that might cut us short, whether it’s climate or other challenges we face.

“But it seems to me that if we do the thinking now, in advance, if we do take the steps that people like Yoshua is arguing for, that’s a good first step, it’s very good that we’ve got the field coming together to understand that this is a powerful technology that has a dark and a light side, it has a yin and a yang, and we need lots of voices in that debate.”
