Instagram and its parent company Meta are being asked to provide answers for yet another incident of alleged bias in the midst of the Israel-Hamas conflict. After being accused of shadowbanning pro-Palestinian posts, the app has been auto-translating Arabic words in Palestinian bios as "terrorist".

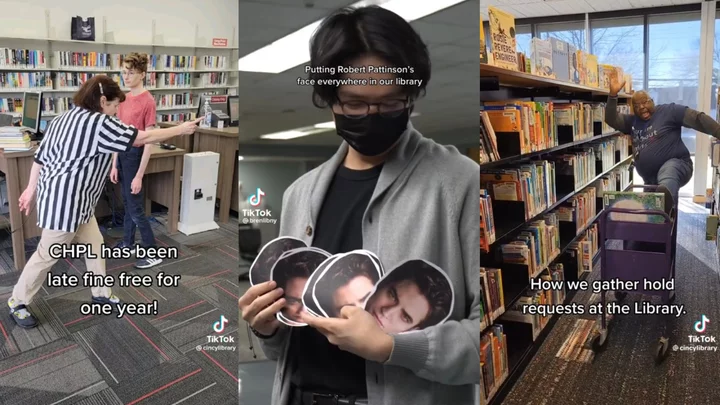

First reported by 404media, the issue appeared for some users who had the Palestinian flag emoji (🇵🇸) or the Arabic word "Alhamdulillah" (ٱلْحَمْدُ لِلَّٰهِ), which means "praise to Allah", in their bios. TikTok user @ytkingkhan posted about the incident in relation to his own Instagram bio. While ytkingkhan isn't Palestinian himself, he wanted to test the matter after a friend brought the issue to light. Upon pressing "See translation", his bio was translated to "Praise be to God, Palestinian terrorists are fighting for their freedom".

The TikTokker showing his Instagram bio, which displayed the word "Palestinian" and the flag emoji. Credit: TikTok / @ytkingkhan.

Upon translation, the word "terrorist" was displayed. Credit: TikTok / @ytkingkhan.

Meta apologized for the issue, saying that it has since been fixed. However, the company did not explain why this happened.

"We fixed a problem that briefly caused inappropriate Arabic translations in some of our products. We sincerely apologize that this happened," a spokesperson told 404media. Mashable has reached out for comment.

On X (formerly Twitter), users expressed frustration and outrage at the incident. One wrote "that's one hell of a 'glitch'", while another said "How is this in anyway justified??".

According to Instagram, translations are provided automatically.

SEE ALSO: People are accusing Instagram of shadowbanning content about Palestine

In response to continuous violence in the region, Meta has said it is closely monitoring its platforms and removing violent or disturbing content relating to the Israel-Hamas war. The tech giant said in a statement on Wednesday that "there is no truth to the suggestion that we are deliberately suppressing [voices]".

"We can make errors," read the statement, "and that is why we offer an appeals process for people to tell us when they think we have made the wrong decision, so we can look into it."

This isn't the first time Meta has been accused of deliberate suppression or bias. Since 2021, the company has been condemned for various incidents of censoring Palestinian voices on its platform, which the digital rights nonprofit Electronic Frontier Foundation (EFF) called "unprecedented" and "systemic".